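In a Kubernetes cluster, when a node becomes unhealthy (NotReady), the node lifecycle controller inside kube-controller-manager adds taints to that node, which are used to evict the Pods running on it.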

Taints:

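During recent testing we hit a case where a node went NotReady but the Pods running on it were not deleted. Investigation showed that the node carried only a single taint. Because none of its taints had the Effect NoExecute, eviction was never triggered for the Pods on that node.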

Taints:
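The check that matters here can be sketched in a few lines of Go. This is only an illustration of the reasoning above, not controller code: the `Taint` struct is a local stand-in for the Kubernetes type, `hasNoExecute` is a hypothetical helper, and the taint keys in `main` are illustrative assumptions rather than the values from our node.

```go
package main

import "fmt"

// Taint is a minimal local stand-in for the Kubernetes core/v1 Taint type
// (an assumption made so that this sketch has no external dependencies).
type Taint struct {
	Key    string
	Effect string
}

// hasNoExecute reports whether any taint carries the NoExecute effect.
// Only NoExecute causes Pods that do not tolerate the taint to be evicted;
// NoSchedule merely keeps new Pods from being scheduled onto the node.
func hasNoExecute(taints []Taint) bool {
	for _, t := range taints {
		if t.Effect == "NoExecute" {
			return true
		}
	}
	return false
}

func main() {
	// Illustrative taint sets; the keys are assumptions, not captured output.
	withEviction := []Taint{
		{Key: "node.kubernetes.io/not-ready", Effect: "NoSchedule"},
		{Key: "node.kubernetes.io/not-ready", Effect: "NoExecute"},
	}
	withoutEviction := []Taint{
		{Key: "node.kubernetes.io/not-ready", Effect: "NoSchedule"},
	}
	fmt.Println("two taints, eviction possible:", hasNoExecute(withEviction))
	fmt.Println("one taint, eviction possible:", hasNoExecute(withoutEviction))
}
```

A node in the second situation stays unschedulable, but its existing Pods keep their bindings, which matches what we observed.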

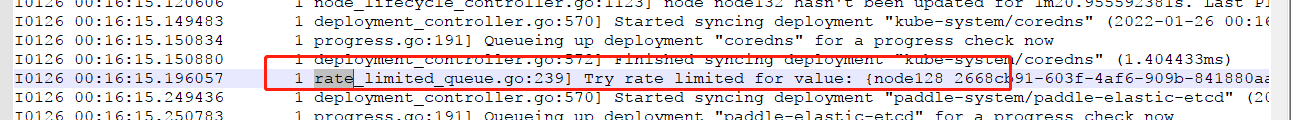

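We raised the kube-controller-manager log level to 10. The cluster has 3 compute nodes. When 2 of them fail, both are tainted correctly, but when the third node fails as well, it does not receive the NoExecute taint. Instead, a rate-limiting message appears in the log.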

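Looking further up in the log, we can see that the cluster entered the PartialDisruption state. At that point node processing is rate-limited, and this node is no longer handled.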

Once the cluster is in the statePartialDisruption state, Pod eviction is no longer triggered for its nodes.

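Now for the source code. Our cluster has no zone information configured, so all nodes fall into a single default zone. When the share of NotReady nodes in that zone reaches 55% (the default of unhealthy-zone-threshold), the cluster enters the statePartialDisruption state.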

// ComputeZoneState returns a slice of NodeReadyConditions for all Nodes in a given zone.
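The decision made by ComputeZoneState can be modelled roughly as follows. This is a simplified sketch based on our reading of the upstream node lifecycle controller, not a copy of it: `computeZoneState` is a hypothetical standalone function that takes plain counts instead of node conditions, and the exact conditions may differ between Kubernetes versions. Note the additional assumption that partial disruption also requires more than two NotReady nodes, which would explain why the problem only appeared when the third node failed.

```go
package main

import "fmt"

// ZoneState mirrors the three states used by the node lifecycle controller.
type ZoneState string

const (
	stateNormal            ZoneState = "Normal"
	statePartialDisruption ZoneState = "PartialDisruption"
	stateFullDisruption    ZoneState = "FullDisruption"
)

// computeZoneState is a simplified model of the zone-state decision.
// threshold corresponds to --unhealthy-zone-threshold (default 0.55).
func computeZoneState(ready, notReady int, threshold float32) ZoneState {
	switch {
	case ready == 0 && notReady > 0:
		// No Ready node is left in the zone.
		return stateFullDisruption
	case notReady > 2 && float32(notReady)/float32(ready+notReady) >= threshold:
		// More than two NotReady nodes and their share reaches the threshold.
		return statePartialDisruption
	default:
		return stateNormal
	}
}

func main() {
	// Hypothetical zone: 3 compute nodes plus 1 other node that stays Ready.
	const total = 4
	for notReady := 1; notReady <= 3; notReady++ {
		ready := total - notReady
		fmt.Printf("notReady=%d threshold=0.55 -> %s\n",
			notReady, computeZoneState(ready, notReady, 0.55))
	}
	// With the threshold raised to 1, the same failure stays in the normal state.
	fmt.Printf("notReady=3 threshold=1 -> %s\n", computeZoneState(1, 3, 1))
}
```

In this model, a threshold of 1 can only be reached when every node is NotReady, and that case is already classified as full disruption, so the partial-disruption branch is effectively disabled.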

Once the cluster has entered the statePartialDisruption state, the rate-limit parameters of the queue that processes unhealthy nodes are adjusted.

// Check the share of unhealthy nodes in the cluster and enter the statePartialDisruption state
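Why the adjusted rate limit stops eviction completely in a small cluster can be shown with a short model. This sketch assumes the upstream behaviour as we understand it: in partial disruption, the eviction rate drops to the secondary rate only if the zone is larger than the large-cluster threshold, and to zero otherwise. The constants are the documented kube-controller-manager defaults (`--node-eviction-rate`, `--secondary-node-eviction-rate`, `--large-cluster-size-threshold`); `partialDisruptionQPS` is a hypothetical name.

```go
package main

import "fmt"

// Documented kube-controller-manager defaults, used here as assumptions.
const (
	evictionQPS           float32 = 0.1  // --node-eviction-rate
	secondaryEvictionQPS  float32 = 0.01 // --secondary-node-eviction-rate
	largeClusterThreshold         = 50   // --large-cluster-size-threshold
)

// partialDisruptionQPS models the eviction rate applied to a zone that is in
// the partial-disruption state. Small zones get a rate of 0, which means
// that no further nodes are processed for eviction.
func partialDisruptionQPS(zoneSize int) float32 {
	if zoneSize > largeClusterThreshold {
		return secondaryEvictionQPS
	}
	return 0
}

func main() {
	fmt.Println("normal state QPS:", evictionQPS)
	for _, size := range []int{4, 50, 51, 200} {
		fmt.Printf("partial disruption, zone size %d -> QPS %v\n",
			size, partialDisruptionQPS(size))
	}
}
```

With only a handful of nodes, the zone is far below the large-cluster threshold, so the limiter is swapped to a rate of zero and the remaining unhealthy node never receives its NoExecute taint.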

In a small cluster, if you still want the Pods on unhealthy nodes to be evicted correctly, you can set the kube-controller-manager parameter unhealthy-zone-threshold=1.